How Veridion Supports Master Data Management

If master data management feels harder than it should, the issue is often not the tools you use, but the quality of the data feeding them.

Duplicate suppliers, outdated records, and inconsistent profiles create blind spots that affect procurement decisions at scale.

So how do you fix that?

In this article, we look at how Veridion supports master data management by addressing data quality issues at their source, and how this approach helps you work with supplier data you can actually trust.

Let’s start with one of the most persistent challenges: duplication.

If you work in a large enterprise, duplicate supplier or third-party records are almost inevitable.

Not because teams are careless, but because supplier data rarely lives in one place.

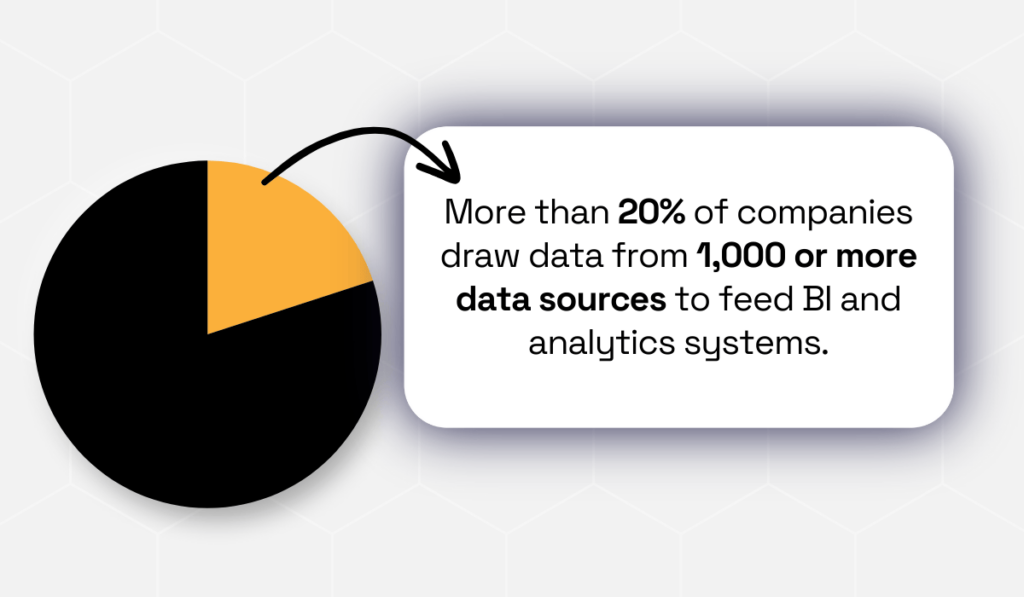

In fact, one market survey found that the average organization manages around 400 separate data sources, with more than 20% of organizations pulling data from over 1,000 sources.

Illustration: Veridion / Data: Matillion

Vendor data inevitably lives in many of them, including ERPs, sourcing platforms, finance systems, and regional tools, often with no single owner or shared standard.

And this is where things start to break down.

When suppliers enter these systems through different channels, duplicates quickly follow.

The same company may appear under slightly different names, addresses, or identifiers depending on the region or source system.

Over time, this fragmentation distorts spend visibility, creates conflicting risk signals, and forces teams into ongoing manual reconciliation.

Without strong data governance, which nearly 40% of organizations still lack, duplicates do not disappear on their own and instead become embedded in daily operations.

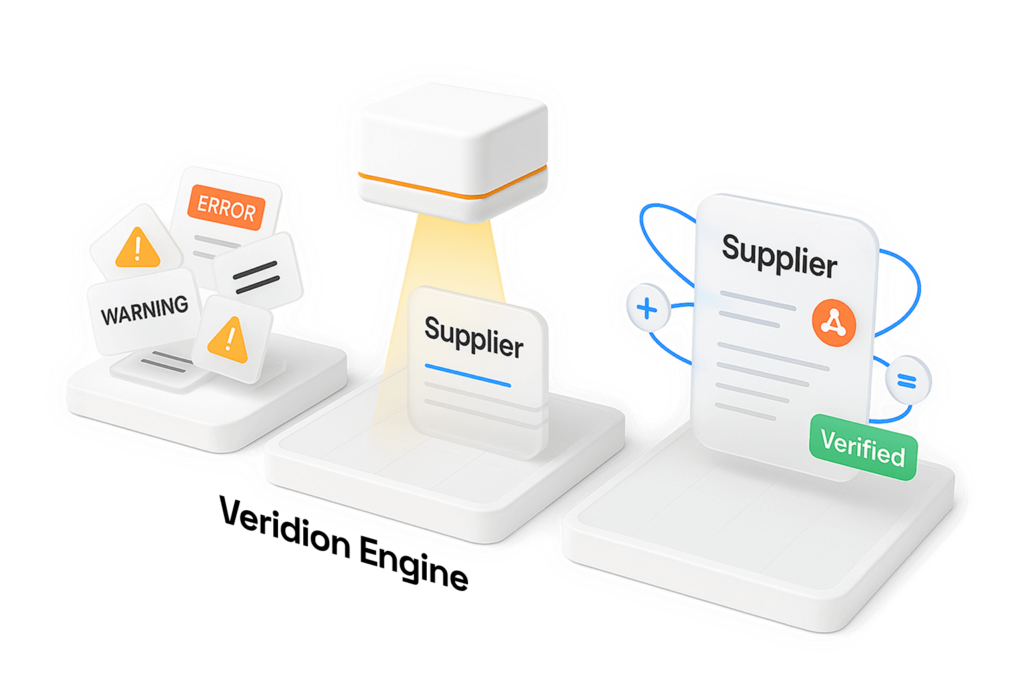

This is the challenge Veridion, our big data platform, addresses at the data layer.

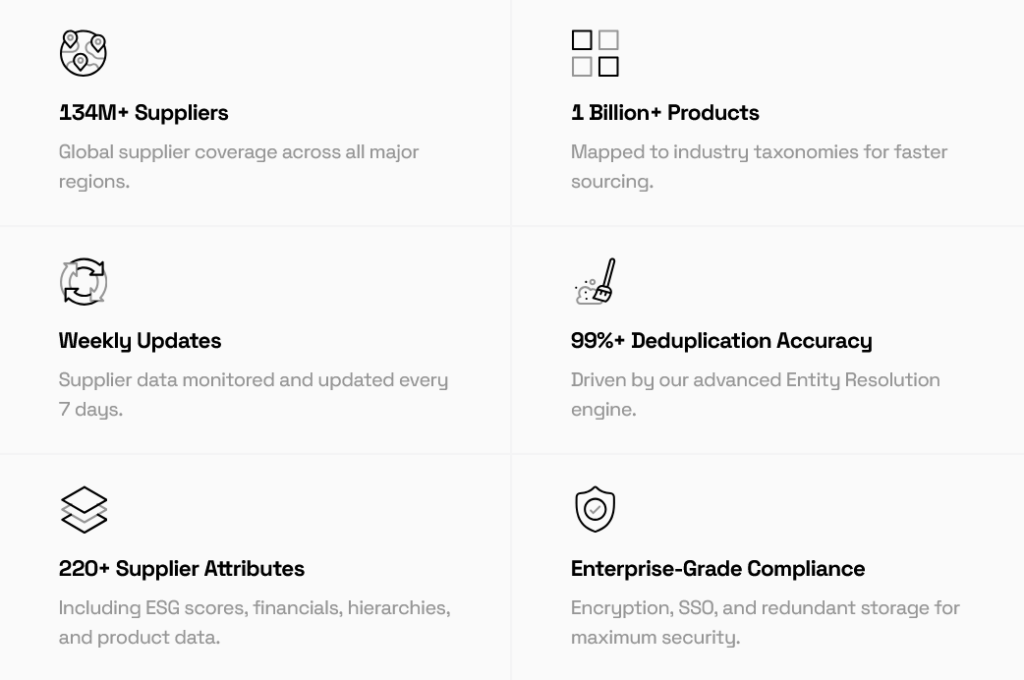

Source: Veridion

Veridion provides a global supplier database, updated weekly, that covers 134 million suppliers and over 1 billion products and is enriched with more than 220 data attributes.

This includes firmographics, financials, ESG indicators, and more.

At this scale, deduplication cannot rely on one-time cleanup projects or rigid rules.

Instead, Veridion’s AI-driven matching engine continuously scans supplier and third-party datasets to detect when multiple records represent the same real-world entity.

Veridion applies probabilistic matching, accounting for natural variation in names, locations, and identifiers, rather than expecting perfect consistency from upstream systems.

Source: Veridion

Once identified, those records are merged into a single, accurate master profile.

This way, fragmentation is addressed as data flows in, not months later during a cleanup project.
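To make the idea of probabilistic matching concrete, here is a minimal sketch in Python. It is not Veridion’s matching engine: the field names, the suffix list, the weights, and the 0.85 threshold are all illustrative assumptions, and plain string similarity from the standard library stands in for whatever models a production system would use.

```python
import re
from difflib import SequenceMatcher

# Illustrative assumptions: suffix list, weights, and threshold are made up.
LEGAL_SUFFIXES = {"inc", "llc", "ltd", "gmbh", "corp", "co", "sa"}
MATCH_THRESHOLD = 0.85


def normalize(text: str) -> str:
    """Lowercase, replace punctuation with spaces, drop common legal suffixes."""
    tokens = re.sub(r"[^\w\s]", " ", text.lower()).split()
    return " ".join(t for t in tokens if t not in LEGAL_SUFFIXES)


def similarity(a: str, b: str) -> float:
    """String similarity in [0, 1] after normalization."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def match_score(rec_a: dict, rec_b: dict) -> float:
    """Blend field similarities into one score instead of demanding exact equality."""
    id_a, id_b = rec_a.get("registry_id"), rec_b.get("registry_id")
    if id_a and id_a == id_b:
        return 1.0  # a shared registry ID is treated as conclusive
    name = similarity(rec_a["name"], rec_b["name"])
    addr_a, addr_b = rec_a.get("address"), rec_b.get("address")
    if not addr_a or not addr_b:
        return name  # no address on one side: fall back to the name alone
    return 0.7 * name + 0.3 * similarity(addr_a, addr_b)


def merge(primary: dict, secondary: dict) -> dict:
    """Combine two records, preferring non-empty values from `primary`."""
    merged = dict(secondary)
    merged.update({k: v for k, v in primary.items() if v})
    return merged


# Hypothetical records for the same company held in two systems, plus an unrelated one.
rec_erp = {"name": "Acme Industries Inc.", "address": "12 Main Street, Austin", "registry_id": ""}
rec_sourcing = {"name": "ACME Industries, LLC", "address": "12 Main St, Austin", "registry_id": "TX-123"}
rec_other = {"name": "Borealis Logistics GmbH", "address": "Hafenstrasse 4, Hamburg"}

if match_score(rec_erp, rec_sourcing) >= MATCH_THRESHOLD:
    print(merge(rec_erp, rec_sourcing))
print(match_score(rec_erp, rec_other) >= MATCH_THRESHOLD)
```

The two Acme records differ in casing, legal form, and street abbreviation, yet they still score above the threshold, while the unrelated record does not. In a real pipeline the weights and threshold would need tuning against labeled examples.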

Veridion applies the same principle it uses when aggregating product information across websites:

“We aggregate, deduplicate, and merge product information from multiple pages whenever the same product appears repeatedly across a company’s website, providing a clear, unified view.”

For you, the impact is practical and immediate.

Continuous deduplication reduces reporting inconsistencies, prevents repeated onboarding and compliance checks, and ensures that spend, risk, and performance analysis are built on a single source of truth.

So, instead of managing suppliers across fragmented records, you work with one reliable profile per entity.

And that reliability strengthens every downstream process that depends on your master data.

In practice, supplier master records rarely stop at basic identifiers.

As a procurement professional, you’re expected to work with far richer information, such as firmographic details, financial signals, ESG attributes, and product intelligence.

All of these are essential for informed sourcing, risk assessment, and supplier management.

Here’s the problem: manually enriching this data doesn’t scale.

In many organizations, enrichment depends on a combination of supplier questionnaires, internal research, third-party databases, and periodic refresh projects.

Each step requires time, coordination, and follow-up. By the time the data is collected, validated, and approved, parts of it are already outdated.

At that point, the challenge is no longer effort. It’s accuracy.

This creates a constant cycle of catch-up, where teams invest significant resources just to maintain a baseline level of data quality, even though the data no longer reflects the current supplier reality.

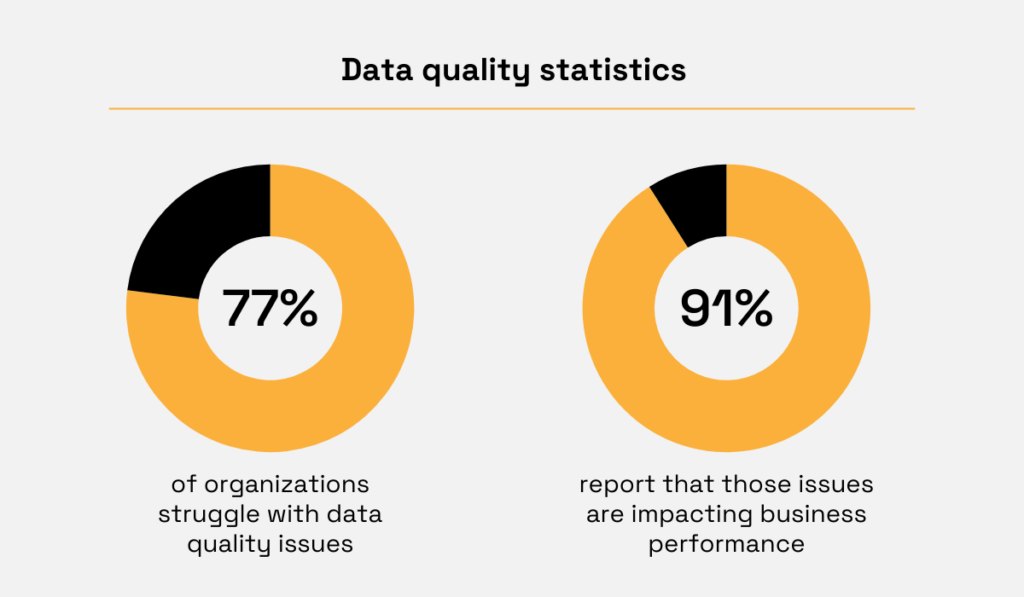

Many organizations are dealing with this challenge.

A survey of 500 data professionals found that 77% of organizations struggle with data quality, and 91% report that these issues are already impacting business performance.

Illustration: Veridion / Data: HPCwire

How does Veridion help?

Rather than treating enrichment as a periodic, manual task, Veridion automates enrichment at scale and embeds it directly into the data flow.

Master data is continuously enriched with firmographic details, financial signals, ESG attributes, and product intelligence as it enters or updates your systems.

So how does this work in practice?

Through Veridion’s Match & Enrich capability, you can submit a company name and country, optionally supplemented with information such as an address, website, registry ID, or phone number.

Veridion first normalizes the input and resolves it to the correct legal entity.

It then enriches that entity using multiple continuously updated data signals, returning a single, comprehensive master profile.

Because the matching process relies on probabilistic signals rather than exact matches, it can correctly identify companies even when inputs contain inconsistencies or minor errors.
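As a rough illustration of the input side, the sketch below models a match request with the two required fields and the optional hints mentioned above. The class name, field names, and payload shape are assumptions made for this example; they are not taken from Veridion’s API documentation, so check the official reference before building against it.

```python
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass
class MatchInput:
    """Hypothetical request model; field names are illustrative only."""

    # Required inputs.
    company_name: str
    country: str
    # Optional hints that can help resolve the right legal entity.
    address: Optional[str] = None
    website: Optional[str] = None
    registry_id: Optional[str] = None
    phone_number: Optional[str] = None

    def to_payload(self) -> dict:
        """Trim whitespace and leave out optional fields that are empty."""
        if not self.company_name.strip() or not self.country.strip():
            raise ValueError("company_name and country are required")
        return {k: v.strip() for k, v in asdict(self).items() if v and v.strip()}


# Example with made-up values.
request = MatchInput("  Acme Industries Inc. ", "US", website="acme.example")
print(request.to_payload())
```

Keeping the optional hints explicit makes it easy to send whatever your source system happens to hold, without padding the request with empty values.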

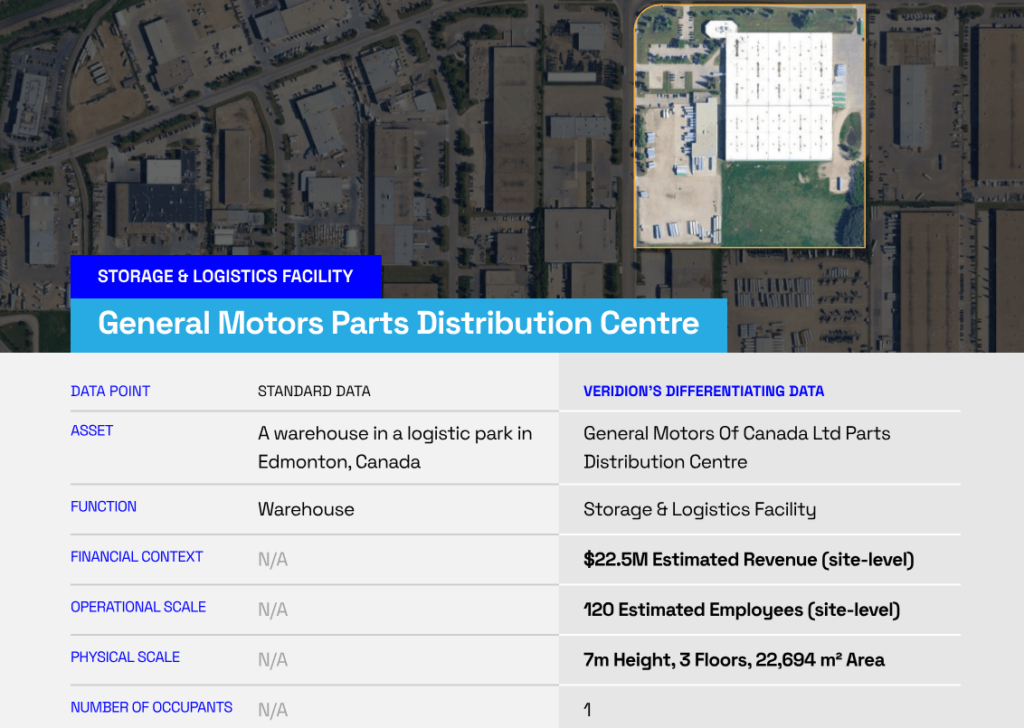

A practical example of Veridion’s depth is enriched location intelligence.

A traditional supplier database might label a site simply as a “warehouse.”

Veridion can go further, distinguishing whether that location is a standard storage facility, a distribution hub, or an R&D center, as in the example below:

Source: Veridion

That distinction matters.

A site that appears to be a generic warehouse may, in reality, be a critical distribution hub supporting nationwide deliveries.

Understanding its true role allows you to assess supplier criticality more accurately, anticipate supply chain vulnerabilities, and prioritize mitigation strategies before disruptions occur.

The result is master data that stays both rich and usable.

With Veridion, you gain deeper supplier context without expanding headcount or relying on batch updates.

As a result, data enrichment becomes something that actively supports daily decision-making rather than slowing it down.

Clean and enriched master data only delivers value if it is structured in a way you can actually use.

Yet in many organizations, supplier classification is still handled manually.

Teams rely on free-text descriptions, inconsistent tags, or one-off categorizations created during onboarding.

Over time, this results in a supplier base that is difficult to segment, analyze, or compare across regions and business units.

And the problem compounds as suppliers change.

As vendors expand into new offerings, enter new markets, or shift their focus, their profiles often remain frozen in outdated categories.

This makes it harder to segment, analyze, and compare suppliers based on what they actually do today.

This is where Veridion changes the model.

Rather than relying on static labels, Veridion automatically classifies suppliers using AI-driven models that reflect what companies actually do in the real world.

Classification is derived from observed data, like products, services, operational footprints, ownership structures, and sustainability signals, and applied as structured, standardized attributes within the master data.

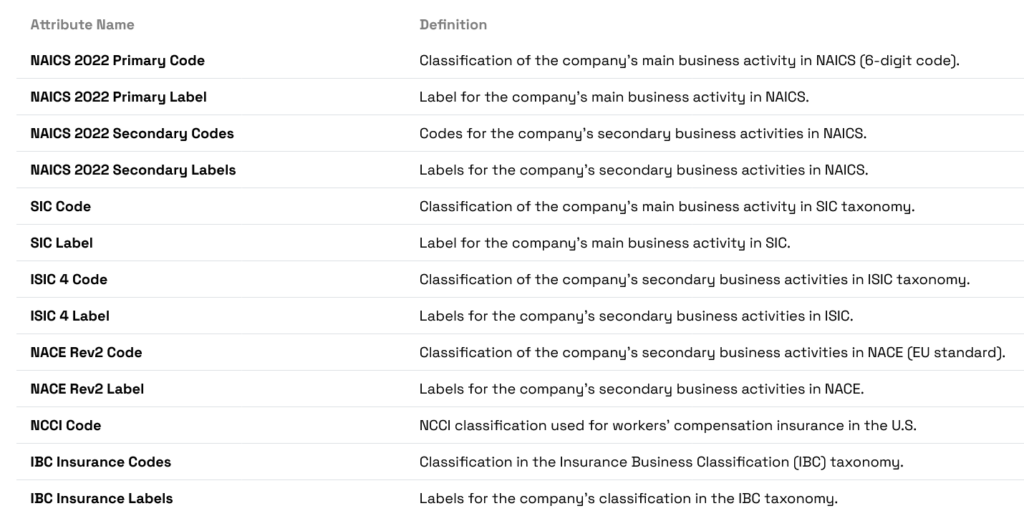

Source: Veridion

At the product and service level, each identified offering is mapped to the United Nations Standard Products and Services Code (UNSPSC), a globally recognized classification system.

This ensures that supplier activities are categorized consistently across regions and systems, without relying on subjective interpretations or inconsistent onboarding inputs.
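One reason a standard code helps is that UNSPSC is hierarchical: an 8-digit commodity code nests inside a class (first six digits), a family (first four), and a segment (first two). The sketch below shows how that structure lets you roll spend up to any level. The supplier names, codes, and amounts are made-up sample data, and the function names are ours.

```python
from collections import defaultdict


def unspsc_levels(code: str) -> dict:
    """Split an 8-digit UNSPSC commodity code into its four hierarchy levels."""
    if len(code) != 8 or not (code.isascii() and code.isdigit()):
        raise ValueError(f"expected an 8-digit UNSPSC code, got {code!r}")
    return {
        "segment": code[:2],
        "family": code[:4],
        "class": code[:6],
        "commodity": code,
    }


def spend_by_level(lines, level: str = "segment") -> dict:
    """Sum spend per UNSPSC prefix at the requested hierarchy level."""
    totals = defaultdict(float)
    for _supplier, code, amount in lines:
        totals[unspsc_levels(code)[level]] += amount
    return dict(totals)


# Hypothetical spend lines: (supplier, UNSPSC code, amount).
sample_lines = [
    ("Supplier A", "43211503", 1200.0),
    ("Supplier B", "43211507", 800.0),
    ("Supplier C", "31161500", 500.0),
]

print(spend_by_level(sample_lines, "segment"))
print(spend_by_level(sample_lines, "class"))
```

Because every supplier offering carries the same kind of code, the same roll-up works across regions and systems without any manual re-tagging.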

But classification doesn’t stop there.

Because supplier profiles are enriched with firmographic, geographic, ownership, and ESG data, you can segment suppliers across multiple dimensions using the same continuously updated master record.

For example, you can segment them by:

As suppliers evolve, their classifications update automatically, ensuring that segmentation reflects current reality.

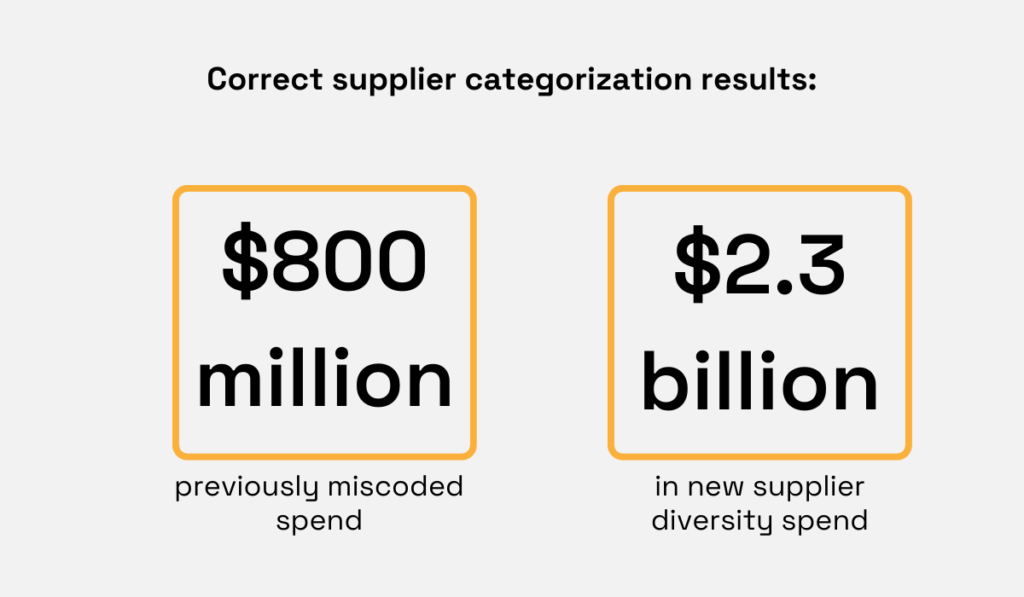

Industry data shows why this matters.

Supplier.io reports that customers who corrected supplier classification uncovered $800 million in previously miscoded spend and identified $2.3 billion in new supplier diversity spend.

Illustration: Veridion / Data: Supplier.io

With Veridion, this structure is built into the data itself.

Manual tagging and re-tagging are eliminated, consistency improves across the entire master data environment, and supplier segmentation becomes reliable rather than reactive.

For you, that means confidence.

You get a master data ecosystem that is cleaner, easier to navigate, and far more actionable for sourcing, risk management, and compliance reporting.

Even the most complete and well-structured master data does not stay accurate on its own.

Supplier information changes constantly.

Companies merge or divest, ownership structures evolve, financial health fluctuates, and regulatory or operational events can occur without warning.

In a large supplier ecosystem with thousands or millions of records, it is simply not realistic for teams to manually track when this information changes.

Without an active way to detect and communicate these changes, master data gradually diverges from reality, and the impact quickly reaches operations and decision-making.

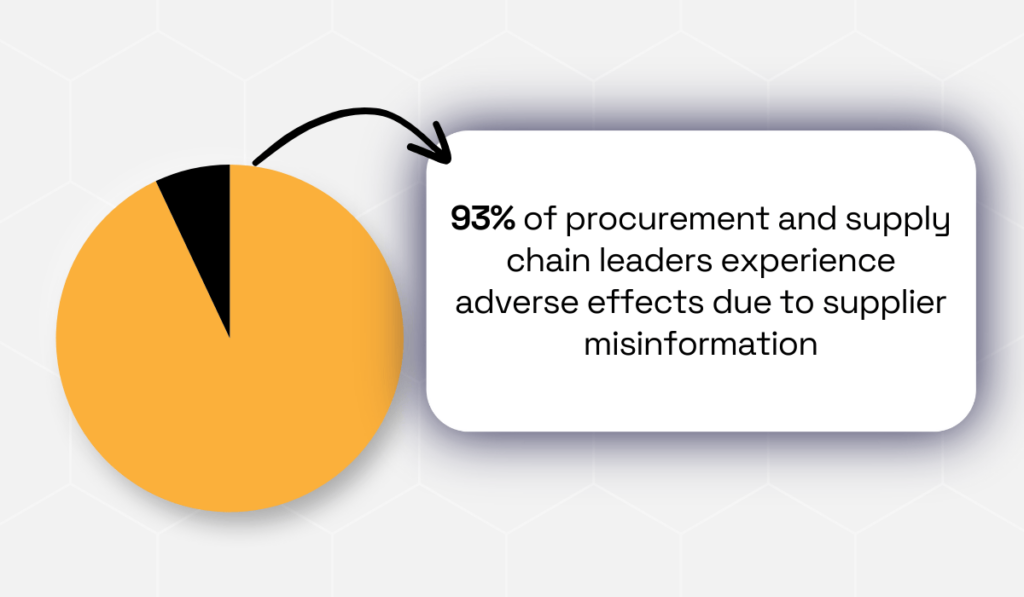

Survey data confirms how significant this risk is.

According to data from TealBook, 93% of procurement and supply chain leaders report experiencing adverse effects due to misinformation about their suppliers, and nearly half encounter these issues frequently.

Illustration: Veridion / Data: TealBook

The consequences range from missed deadlines and project delays to dissatisfied stakeholders and financial loss, often because teams rely on outdated or incomplete supplier information.

That’s why Matt Palackdharry, Vice President of Sales and Commercial Strategy at TealBook, points out the importance of accurate and up-to-date supplier information:

“Trusted supplier information is the most critical asset a procurement organization can possess. This information is the fuel that powers all procurement technology, it influences billions of dollars of business decisions, and without it, organizations lose the ability to be agile when supply chains become overrun.”

Trusted data depends on two things: keeping information up to date and knowing when something changes.

This is precisely what Veridion provides.

How?

Well, Veridion continuously monitors your supplier base and notifies teams when material changes occur, including ownership updates, financial shifts, regulatory developments, or operational disruptions.

So, instead of discovering issues reactively, you gain early visibility into changes that may affect risk, compliance, or supplier performance.
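Conceptually, change monitoring comes down to comparing the latest view of a supplier with the previous one and flagging differences in the fields you care about. The sketch below is a simplified illustration of that idea, not a description of how Veridion implements its alerts. The field names and the list of material fields are assumptions.

```python
# Hypothetical set of fields whose changes should trigger an alert.
MATERIAL_FIELDS = ("legal_name", "ownership", "status", "credit_rating")


def detect_changes(previous: dict, current: dict, fields=MATERIAL_FIELDS) -> list:
    """Return one entry per monitored field whose value differs between snapshots."""
    changes = []
    for field in fields:
        old, new = previous.get(field), current.get(field)
        if old != new:
            changes.append({"field": field, "old": old, "new": new})
    return changes


# Made-up snapshots of the same supplier record taken at two points in time.
last_snapshot = {"legal_name": "Acme Industries", "ownership": "Founder-held", "status": "active"}
new_snapshot = {"legal_name": "Acme Industries", "ownership": "Acquired by Holding X", "status": "active"}

for change in detect_changes(last_snapshot, new_snapshot):
    print(f"{change['field']}: {change['old']!r} -> {change['new']!r}")
```

Restricting the comparison to a short list of material fields keeps alerts focused on changes that affect risk or compliance, rather than every cosmetic update.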

For example:

However, keep in mind that these alerts do not replace existing risk processes.

They only strengthen them by ensuring master data reflects real-world conditions rather than last month’s snapshot.

Remember:

With Veridion’s help, your supplier records remain accurate, structured, and alive.

And in master data management, that ongoing awareness is what turns data into a dependable foundation for decision-making.

Master data is only as valuable as its accuracy, depth, structure, and timeliness.

Veridion is designed to support all four.

By applying AI and machine learning across a global data foundation covering millions of companies, Veridion helps eliminate duplicate records, continuously enrich supplier profiles, automatically classify vendors, and alert teams when meaningful changes happen.

You get a single, reliable source of truth for supplier master data, which is exactly what effective master data management requires.