What Are the Risks of Using AI in Insurance Underwriting?

What if the biggest risk of AI-based insurance underwriting isn’t biased data or black-box algorithms, but how readily underwriters trust what the AI tells them?

AI has quickly become part of everyday underwriting.

From automating risk assessments to accelerating decision-making, these tools promise speed, consistency, and scale.

But beneath the efficiency lies a growing set of risks that aren’t always obvious at first glance.

Models trained on incomplete or outdated data can quietly distort risk profiles.

Automated decisions can mask errors that once would’ve been caught by human judgment.

And overreliance on AI can slowly erode the underwriting expertise that insurers depend on when edge cases arise.

When these issues go unchecked, the impact goes beyond inaccurate pricing.

They expose insurers to regulatory scrutiny, reputational damage, and loss ratios that are hard to explain after the fact.

This guide explains the key risks of using AI in insurance underwriting and outlines what underwriters must consider to use these tools responsibly while maintaining accuracy and accountability.

Your AI model is only as reliable as the data it is trained on.

And right now, many insurers are feeding their AI systems garbage.

We’re talking about fragmented data, outdated information, and self-reported details that nobody’s bothered to verify.

When your data is flawed, your AI’s risk assessments and pricing will be too.

If you train your model on stale firmographic data, you’ll end up with mispriced policies and exposures you completely missed.

It’s the typical “garbage in, garbage out” problem. Bad input data yields bad underwriting decisions every single time.

The scale of this problem is alarming.

Research from Veridion shows that 45% of insurers’ commercial customers have data inaccuracies in critical areas like business activities and addresses.

Think about that. Nearly half of your customer data could be wrong.

And it gets worse.

According to industry research, 45% of business data provided by applicants is inaccurate, which directly complicates your underwriting decisions.

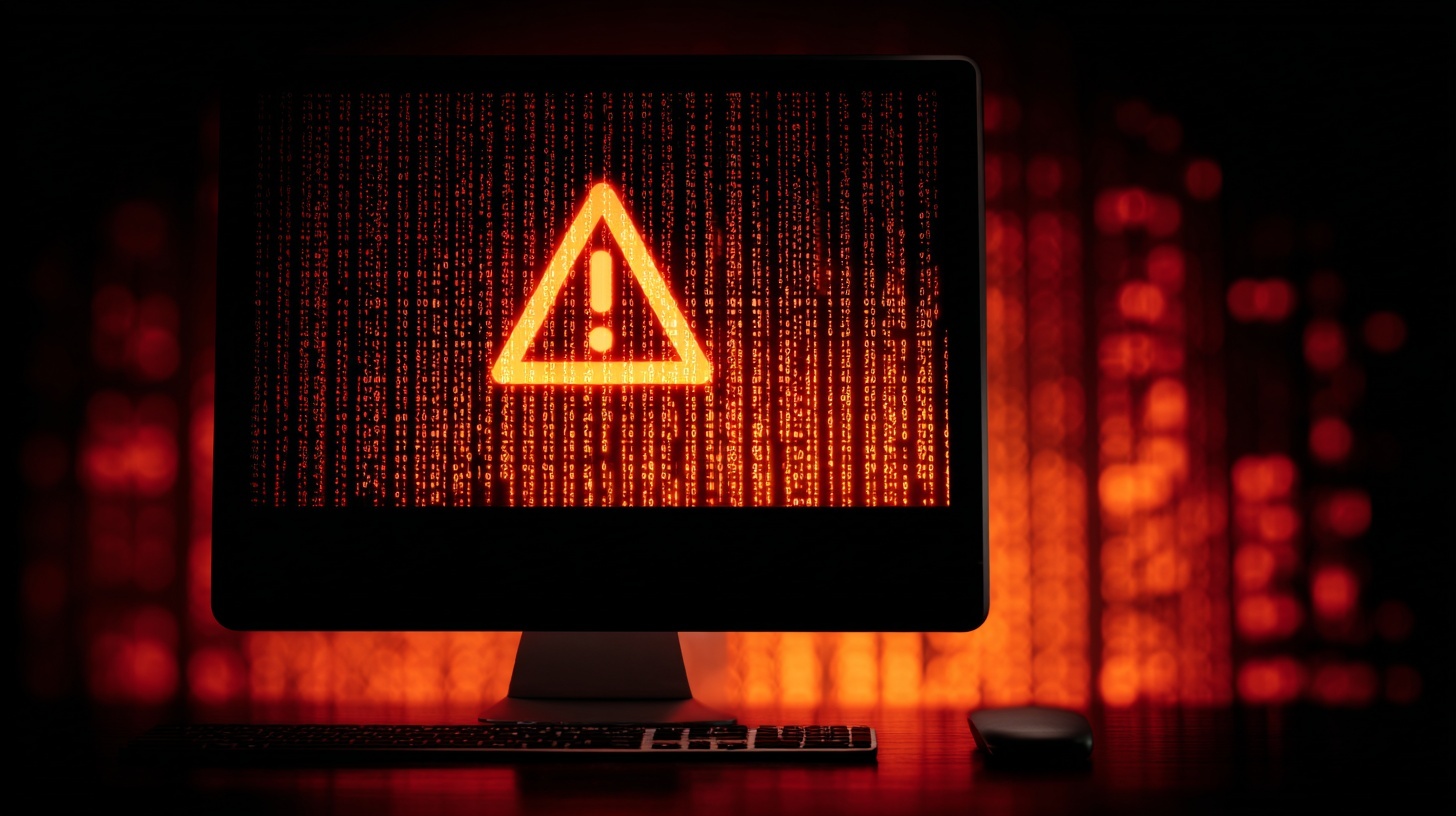

Underwriters are already feeling the pain.

Accenture found that underwriters spend up to 40% of their time on manual data validation.

Illustration: Veridion / Data: Accenture

That’s up to two days of every five-day workweek spent just checking whether the information they have is actually correct.

And all that manual work? It increases the potential for errors.

The pricing implications are significant, too.

Up to 60% of small businesses are misclassified, leading to premium leakage and incorrect pricing.

So what’s the solution?

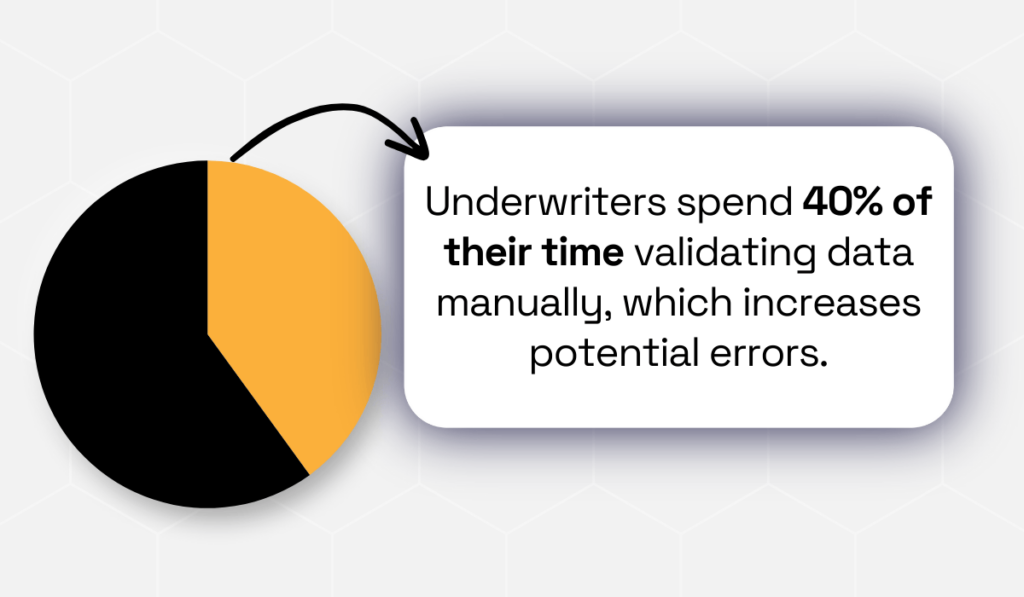

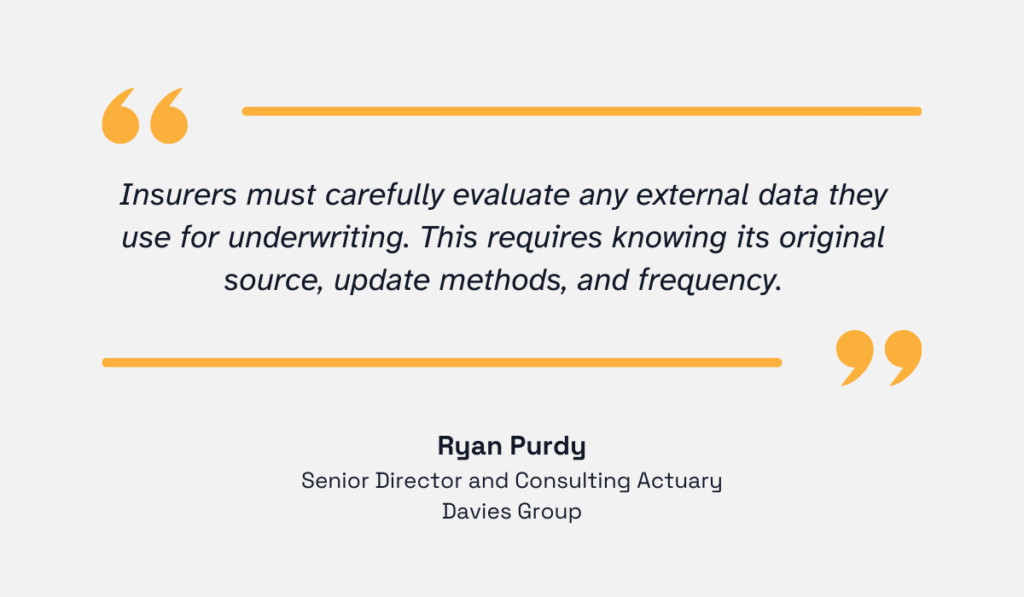

Ryan Purdy, Senior Director and Consulting Actuary at Davies Group, a tech and professional services firm, offers a starting point:

Illustration: Veridion / Quote: Risk Management Magazine

You need to integrate high-quality external data sources and enforce strong data governance.

Here’s how to do it:

Enrich your existing data.

Use APIs or data platforms to match and update each company’s profile: legal status, employee count, revenue, locations, and more.

Trusted providers like Veridion use AI to aggregate, verify, and continuously update business profiles.

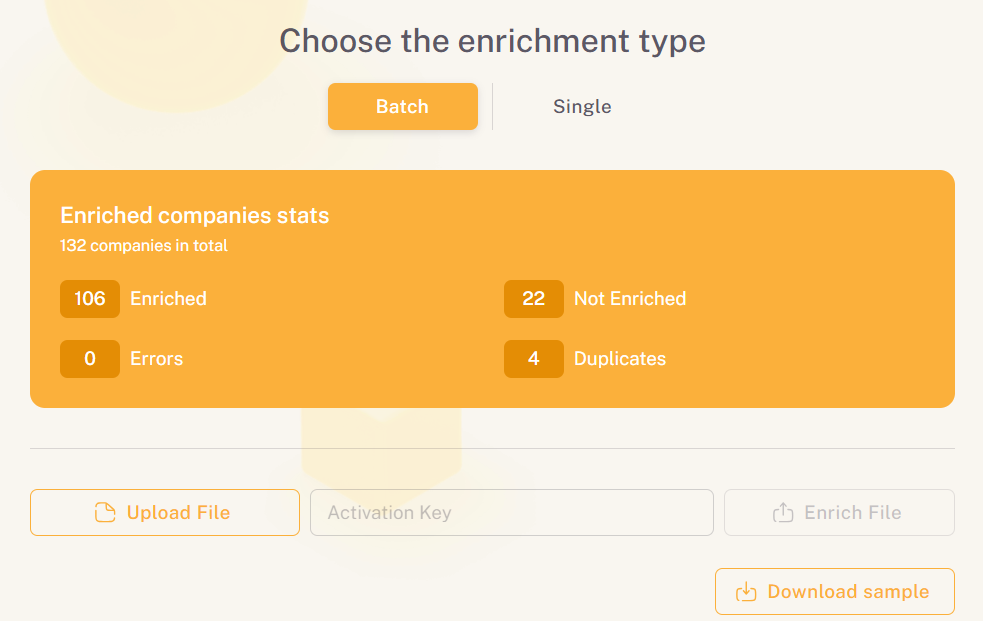

Source: Veridion

The platform maintains an extensive database of 130+ million business profiles and adds hundreds of new attributes per company weekly.

This fills gaps and corrects errors in your own data before they poison your AI models.

Veridion also cleans and deduplicates your inputs, normalizing company names and addresses so inconsistent entries are eliminated.

These accurate, unified records ultimately prevent you from evaluating one risk multiple times or overlooking it entirely.
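Veridion’s own matching pipeline isn’t described here, but a minimal sketch can show what normalizing and deduplicating company records involves in principle. It assumes an exact match on a normalized name plus a normalized address. The legal-suffix and abbreviation tables are hypothetical and deliberately short; real entity resolution usually adds fuzzy matching and external identifiers.

```python
import re
import unicodedata

# Hypothetical, incomplete list of legal-form suffixes to ignore when matching names.
LEGAL_SUFFIXES = {"inc", "incorporated", "llc", "ltd", "limited", "corp", "corporation", "co", "gmbh"}

# Hypothetical street-type abbreviations so "Street" and "St." compare equal.
ADDRESS_ABBREVIATIONS = {"street": "st", "avenue": "ave", "road": "rd", "suite": "ste"}


def normalize_name(name: str) -> str:
    """Lowercase, strip accents and punctuation, and drop trailing legal-form suffixes."""
    text = unicodedata.normalize("NFKD", name)
    text = "".join(ch for ch in text if not unicodedata.combining(ch))
    tokens = re.sub(r"[^\w\s]", " ", text.lower()).split()
    while tokens and tokens[-1] in LEGAL_SUFFIXES:
        tokens.pop()
    return " ".join(tokens)


def normalize_address(address: str) -> str:
    """Lowercase, strip punctuation, and abbreviate common street-type words."""
    tokens = re.sub(r"[^\w\s]", " ", address.lower()).split()
    return " ".join(ADDRESS_ABBREVIATIONS.get(tok, tok) for tok in tokens)


def deduplicate(records: list[dict]) -> list[dict]:
    """Keep the first record seen for each (normalized name, normalized address) key."""
    unique: dict = {}
    for record in records:
        key = (normalize_name(record["name"]), normalize_address(record["address"]))
        unique.setdefault(key, record)
    return list(unique.values())


records = [
    {"name": "Acme Widgets, Inc.", "address": "12 Main Street"},
    {"name": "ACME WIDGETS INC", "address": "12 Main St."},
    {"name": "Beta Logistics LLC", "address": "400 Harbor Road"},
]
print(len(deduplicate(records)))  # the two Acme entries collapse into one
```

Keying on name and address together avoids merging two unrelated businesses that happen to share a name, at the cost of missing duplicates whose addresses were entered very differently.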

Industry leaders are already seeing the benefits of platforms like Veridion.

Jasper Li, CEO of SortSpoke, an AI-powered intelligent document processing (IDP) platform, explains the value of combining AI capabilities with Veridion’s quality data:

Source: Veridion

Other companies have unlocked significant benefits thanks to Veridion, too.

Mahavir Chand, Co-founder of Insillion, an insurtech company providing low-code platforms for insurance product creation, highlights how quality data improves their entire workflow:

Source: Veridion

All in all, when you proactively validate and enrich your data, you help ensure your AI model sees the true risk profile.

High-quality data translates to more accurate models, fairer pricing, and confidence that your AI recommendations aren’t built on flawed inputs.

And that’s the foundation everything else is built on.

If your data reflects past biases, your AI will too. And that’s a serious problem.

Think about it this way: historical underwriting and claims data might under- or overrepresent certain businesses based on size, location, or ownership.

When your AI learns from this skewed data, it starts making unfair decisions, such as overpricing certain risks or excluding businesses that should actually qualify.

Peter Wood, entrepreneur and Chief Technology Officer at Spectrum Search, a tech recruitment firm, explains it plainly:

Illustration: Veridion / Quote: Risk Management Magazine

The consequences?

You’re looking at regulatory violations, ethical issues, and damage to your reputation.

Hence, AI underwriting tools must not produce discriminatory results.

Bryan Barrett, Regional Underwriting Manager at Munich Re Specialty, warns that some organizations are already seeing the fallout:

Illustration: Veridion / Quote: Munich Re Specialty

The legal questions are particularly problematic.

Carolyn Rosenberg, Partner at the international law firm Reed Smith LLP, highlights the concerns regulators are raising:

Illustration: Veridion / Quote: Risk and Insurance

Among the questions that arise, Rosenberg adds, are who is actually making a claim decision and for what purpose, and what role technology plays versus human judgment.

Here’s the bottom line: even one error can be catastrophic.

Ray Ash, Executive VP at Westfield Specialty Insurance, explains why the stakes are so high:

“For one thing, because we are such a heavily regulated industry, the financial penalties and reputational damage that could result if AI goes rogue are too great, it’s not worth the risk. And that would destroy the trust within a client relationship that likely took years to build.”

So how do you overcome bias?

Treat your AI models with the same rigor you apply to financial models.

First, audit regularly.

Test your AI outputs across different business segments. Are certain industries consistently getting higher rates for no clear reason?

Use fairness-check tools and explainable AI frameworks to catch these issues. Run scenarios with recent data to make sure your predictions stay consistent across different groups.

This is ongoing work.

It ultimately comes down to how the AI is trained, how carefully its performance is validated before deployment, and how consistently it is monitored and refined over time.
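One simple way to start testing outputs across segments is to compare approval rates. The sketch below flags any segment whose approval rate falls below 80% of the best-served segment’s rate. That 0.8 threshold borrows the "four-fifths" heuristic from U.S. employment-selection guidance and is an assumption here, not an insurance standard. The segment names and counts are hypothetical, and a flag is a prompt to investigate: a real audit would also control for legitimate risk factors and apply the same idea to premiums.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Approval rate per segment, from an iterable of (segment, approved) pairs."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for segment, approved in decisions:
        totals[segment] += 1
        approvals[segment] += int(approved)
    return {seg: approvals[seg] / totals[seg] for seg in totals}


def flag_disparities(decisions, threshold=0.8):
    """Map each segment whose approval rate is below `threshold` times the highest
    segment's rate to that ratio. A flag means "look closer", not "proven bias"."""
    rates = approval_rates(decisions)
    if not rates:
        return {}
    best = max(rates.values())
    if best == 0:
        return {}
    return {seg: rate / best for seg, rate in rates.items() if rate / best < threshold}


# Hypothetical audit sample: 10 retail and 10 construction applications.
decisions = ([("retail", True)] * 8 + [("retail", False)] * 2
             + [("construction", True)] * 5 + [("construction", False)] * 5)
print(flag_disparities(decisions))  # construction: 0.5 / 0.8 = 0.625, below 0.8
```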

Second, diversify your training data.

Don’t let your AI learn from a narrow slice of history. Enrich your datasets with examples from varied geographies, industries, and risk levels.

Make sure no group is left out or underrepresented, and resist the temptation to overfit to quirks in your legacy data.

Third, enforce AI governance and transparency.

Document everything.

How was each model developed? What data sources did it use? What factors does it consider?

Create clear policies, assign oversight roles, and develop simple explanations of how AI influences your decisions.

And keep humans in the loop. Don’t blindly accept every suggestion your AI makes.

For borderline or complex cases, your underwriters need to review and potentially override AI outputs when something doesn’t look right.

Overall, AI risks largely stem from poor governance and training, since models perform only as well as the standards used to build them.

Ethical, well-governed training enables broader use, while improper or unlicensed data can trigger legal issues and reputational harm.

When you combine regular bias audits with diverse training data and strict oversight, you protect yourself from perpetuating historical prejudices, reduce regulatory risk, and ensure your AI use aligns with fairness expectations.

Insurance is one of the most regulated industries out there.

That means your AI-powered underwriting has to fit within a complex web of laws. And regulators worldwide are watching closely.

They’re demanding fairness, transparency, and consumer protection.

Take the EU, for example.

The new EU AI Act classifies AI systems used for risk assessment and pricing of individuals in life and health insurance as “high-risk,” which triggers strict requirements.

Existing regulations already apply: you must avoid algorithmic discrimination under the EU Charter of Fundamental Rights and GDPR.

The Insurance Distribution Directive requires that insurance products, including AI-influenced ones, be distributed transparently and in the customer’s best interest.

Additionally, in the U.S., many states have adopted NAIC guidance to oversee AI in insurance.

New York’s Department of Financial Services has particularly strict rules.

In July 2024, it issued Insurance Circular Letter No. 7, which sets standards for the use of AI in underwriting and pricing, with the aim of ensuring fairness and transparency.

You need robust oversight of any AI used for underwriting and pricing. And you must explain how AI factors into your decisions.

Robert Tomilson, Member in Clark Hill’s Insurance and Reinsurance practice, offers some practical advice:

Illustration: Veridion / Quote: Risk and Insurance

The cost of non-compliance? Legal penalties, fines, or being forced to redesign your AI entirely.

So, here’s how to mitigate compliance risk:

Build regulation into your AI program from day one.

First, get legal and compliance teams involved early.

They should review your AI use cases and ensure you’re aligned with evolving rules.

GDPR, for instance, gives individuals the right not to be subject to significant decisions based solely on automated processing, and requires safeguards such as the right to obtain human intervention.

Don’t wait until you’re facing a violation to bring these teams in.

Second, choose or develop explainable models.

If you can trace how your model arrives at each decision, you’ll have an easier time meeting transparency requirements.

Document how it works and keep detailed logs. When regulators come knocking, you’ll be ready.

Third, establish a formal governance framework.

Follow emerging best practices from regulators: maintain inventories of all your AI models, conduct risk assessments, and set up oversight committees.

Define policies for AI use, assign roles for monitoring, and schedule regular reviews.

Nora Benanti, SVP and Head of Claims Analytics at Arch Capital Group, a leading global insurer, explains why diligent monitoring matters:

Illustration: Veridion / Quote: Risk and Insurance
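A model inventory with scheduled reviews doesn’t need to be elaborate to be useful. The sketch below is a minimal, hypothetical record format: the field names, the 180-day default review interval, and the example models are all assumptions, chosen to mirror the questions raised earlier (who owns the model, what it’s for, what data it uses).

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class ModelRecord:
    """One entry in a hypothetical AI model inventory."""
    name: str
    owner: str               # team accountable for monitoring the model
    purpose: str             # which decisions the model influences
    data_sources: list[str]  # documented training and scoring inputs
    risk_tier: str           # e.g. "high" if it drives pricing or eligibility
    last_review: date
    review_interval_days: int = 180

    def next_review(self) -> date:
        return self.last_review + timedelta(days=self.review_interval_days)


def overdue_reviews(inventory: list[ModelRecord], today: date) -> list[str]:
    """Names of models whose scheduled review date has already passed."""
    return [m.name for m in inventory if m.next_review() < today]


inventory = [
    ModelRecord("property-pricing-v3", "Pricing Analytics", "small-commercial property pricing",
                ["internal claims", "third-party firmographics"], "high", date(2024, 1, 15), 90),
    ModelRecord("submission-triage-v1", "Underwriting Ops", "submission prioritization",
                ["broker submissions"], "medium", date(2024, 6, 1)),
]
print(overdue_reviews(inventory, date(2024, 6, 30)))  # ['property-pricing-v3']
```

Giving higher-risk models a shorter review interval, as in the pricing example, is one way to tie oversight effort to potential impact.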

Fourth, stay engaged with regulators and industry groups.

Rules are changing. Be prepared to adapt as they do.

The future might hold even more possibilities.

Ray Ash, quoted earlier, suggests that insurers could train an AI to understand their risk metrics and acceptable ranges, much like the reference ranges used in medical testing.

This would allow it to support underwriters by accelerating and refining risk assessments.

The goal is more than just efficiency.

It’s building an AI underwriting system that continues to meet fairness and legal standards as they evolve.

Integrate compliance into your AI process from the start and explain your models proactively.

Involve regulators early and maintain clear documentation.

When you do this right, you’ll avoid regulatory pitfalls while driving the efficiency gains you’re looking for.

Your AI systems are hungry for data.

They consume massive volumes of sensitive information: financials, health records, property details, and more.

And that makes them prime targets for cyberattacks.

If your AI pipeline or data store is compromised, you’ll face a nightmare scenario: regulatory fines such as GDPR penalties, loss of client trust, and potential lawsuits.

And the reality is: insurers are falling behind on cybersecurity.

One SecurityScorecard report found that insurance carriers are disproportionately affected by third-party breaches.

Although they made up about 27% of the total survey sample, they represented 50% of the companies hit by third-party cybersecurity incidents.

No wonder companies are now demanding more transparency from their insurers.

Jeremy Stevens, VP of EMEA & APAC at Xceedance, a global insurtech firm and consulting company, explains:

Illustration: Veridion / Quote: Risk Management Magazine

And insurers must be ready with answers, Stevens adds.

They should provide detailed documentation or reports that outline the factors and data inputs considered by their AI models in order to help clients understand the rationale behind decisions.

So how do you protect yourself and ensure you keep your clients’ trust?

Implement strong data security and governance.

Start with encryption and access control.

Encrypt sensitive data both in transit and at rest. Use strict role-based access so only authorized staff or systems can query your underwriting datasets.
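Role-based access boils down to a deny-by-default check: a request succeeds only if one of the caller’s roles explicitly grants the permission. The sketch below assumes a hypothetical set of roles and permissions; in practice this logic usually lives in your database or identity provider rather than in application code.

```python
from enum import Enum


class Permission(Enum):
    READ_APPLICATIONS = "read_applications"
    READ_LOSS_HISTORY = "read_loss_history"
    EXPORT_DATASET = "export_dataset"
    RETRAIN_MODEL = "retrain_model"


# Hypothetical role model; real roles come from your own organization.
ROLE_PERMISSIONS = {
    "underwriter": {Permission.READ_APPLICATIONS, Permission.READ_LOSS_HISTORY},
    "data_scientist": {Permission.READ_LOSS_HISTORY, Permission.RETRAIN_MODEL},
    "model_auditor": {Permission.READ_APPLICATIONS, Permission.READ_LOSS_HISTORY},
    "data_steward": {Permission.EXPORT_DATASET},
}


def is_allowed(roles: list[str], permission: Permission) -> bool:
    """Deny by default: allow only if at least one assigned role grants the permission."""
    return any(permission in ROLE_PERMISSIONS.get(role, set()) for role in roles)


print(is_allowed(["underwriter"], Permission.READ_APPLICATIONS))  # True
print(is_allowed(["underwriter"], Permission.EXPORT_DATASET))     # False
```

Unknown roles fall through to an empty permission set, so a typo in a role name fails closed rather than open.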

Next, manage your vendors carefully.

Any third-party data provider must meet high security standards. Conduct thorough due diligence. Require your partners to carry cyber insurance or undergo regular security audits.

Build privacy into your design.

Anonymize or pseudonymize personal data wherever possible.

Collect only the minimum data you need and get clear consent. If you’re collaborating on aggregated datasets, employ techniques like differential privacy.
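Pseudonymization and data minimization can be combined in one preprocessing step. The sketch below replaces a direct identifier with a keyed hash (HMAC-SHA256, from Python’s standard library) and keeps only the fields a model needs. The field names and the `REQUIRED_FIELDS` set are hypothetical. Note that pseudonymized data generally still counts as personal data under GDPR, because whoever holds the key can re-link records by recomputing pseudonyms for known identifiers.

```python
import hashlib
import hmac

# Hypothetical set of fields the pricing model actually needs (data minimization).
REQUIRED_FIELDS = {"industry_code", "employee_count", "annual_revenue", "postcode"}


def pseudonymize(identifier: str, key: bytes) -> str:
    """Keyed hash: the same identifier always maps to the same pseudonym,
    and without the key nobody can recompute that mapping."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict, key: bytes) -> dict:
    """Drop fields the model doesn't need and replace the direct identifier with a pseudonym."""
    reduced = {k: v for k, v in record.items() if k in REQUIRED_FIELDS}
    reduced["applicant_id"] = pseudonymize(record["tax_id"], key)
    return reduced


applicant = {
    "tax_id": "12-3456789", "company_name": "Acme Widgets", "owner_email": "owner@example.com",
    "industry_code": "332710", "employee_count": 42, "annual_revenue": 6_500_000, "postcode": "10001",
}
# In practice the key would come from a secrets manager, stored apart from the dataset.
print(minimize(applicant, key=b"replace-with-a-managed-secret"))
```

A keyed hash is used instead of a plain SHA-256 because tax IDs come from a small, guessable space; without a secret key, anyone could hash candidate IDs and reverse the pseudonyms by brute force.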

Monitor continuously and prepare for incidents.

Watch your AI systems for unusual access patterns.

Have an incident-response plan ready to isolate and contain breaches quickly. Regularly update and patch your AI tools to close vulnerabilities before attackers find them.
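Watching for unusual access patterns can start with something as simple as comparing each account’s activity to its own history. The sketch below flags accounts whose query count today sits more than three standard deviations above their historical mean. The account names, counts, and threshold are hypothetical, and a production setup would typically feed alerts into existing security monitoring rather than rely on a standalone script.

```python
from statistics import mean, stdev


def flag_unusual_access(history: dict[str, list[int]], today: dict[str, int],
                        z_threshold: float = 3.0) -> list[str]:
    """Flag accounts whose query count today is far above their own historical baseline."""
    flagged = []
    for account, counts in history.items():
        if len(counts) < 2:
            continue  # not enough history to estimate a baseline
        baseline = mean(counts)
        spread = max(stdev(counts), 1.0)  # floor avoids division by zero for perfectly steady accounts
        observed = today.get(account, 0)
        if (observed - baseline) / spread > z_threshold:
            flagged.append(account)
    return flagged


# Hypothetical daily query counts against the underwriting dataset.
history = {
    "uw_jsmith": [40, 35, 42, 38, 45],
    "svc_pricing_api": [1000, 1020, 980, 1010, 990],
}
today = {"uw_jsmith": 400, "svc_pricing_api": 1005}
print(flag_unusual_access(history, today))  # ['uw_jsmith']
```

Baselining per account matters: 400 queries would be routine for the pricing service but is a tenfold spike for an individual underwriter.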

Stay compliant with data laws.

GDPR is just the baseline.

New AI-focused regulations are emerging, and some jurisdictions will require human review of automated decisions. Make sure you demonstrate meaningful human oversight.

When you treat data security as a core part of your AI strategy, you protect your underwriting business from devastating breaches.

Strong encryption, strict access controls, and robust third-party audits will keep customer data safe while letting you capture AI’s benefits.

AI is fast. It can analyze data and recommend underwriting actions faster than any human.

But speed doesn’t equal wisdom.

When you put too much trust in automated outputs, you open yourself up to serious risk.

AI systems can miss context and nuance that a seasoned underwriter would catch immediately. This is especially true for complex or non-standard accounts.

For instance, suppose your model flags a business as low-risk based purely on the data.

Meanwhile, a human underwriter knows about recent management changes or a looming lawsuit that completely changes the risk profile.

If you let AI make fully autonomous decisions, you risk mispricing coverage or missing critical exclusions.

AI should be a support tool, not a replacement for human judgment.

Even as AI gets smarter, it can’t eliminate humans from the underwriting process, especially for complex risks.

Here’s how to leverage AI safely:

| Practice | How to apply it |
|---|---|
| Keep your underwriters in the loop | Use AI to provide insights or initial scores, but require human review for final decisions on significant policies. |
| Formalize your human-in-the-loop process | Create “stop points” where AI output goes back to a person before proceeding. This ensures expertise is applied exactly where it’s needed most. |
| Demand explainable outputs | Use models that give interpretable reasons for their suggestions. If an AI assigns a high risk score, you should know which factors drove it. |
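The three practices in the table can be expressed as a single routing rule that decides when an AI recommendation must stop for a person. In the sketch below, the `Recommendation` fields, the 0.3 score cutoff, and the 50,000 premium threshold are hypothetical; each insurer would set its own stop points.

```python
from dataclasses import dataclass


@dataclass
class Recommendation:
    risk_score: float       # model output, assumed to be in [0, 1]; higher is riskier
    top_factors: list[str]  # explanation: the inputs that drove the score
    annual_premium: float


# Hypothetical stop-point settings; each insurer would calibrate its own.
AUTO_MAX_SCORE = 0.3
SIGNIFICANT_PREMIUM = 50_000.0


def route(rec: Recommendation) -> str:
    """Decide whether an AI recommendation may proceed or must stop for a human underwriter."""
    if not rec.top_factors:
        return "human_review"  # no explanation available, so no automated decision
    if rec.annual_premium >= SIGNIFICANT_PREMIUM:
        return "human_review"  # significant policy: the final decision stays with a person
    if rec.risk_score >= AUTO_MAX_SCORE:
        return "human_review"  # elevated or borderline risk
    return "auto_proceed"      # low-risk, small, explained case; still sampled in audits


print(route(Recommendation(0.12, ["years_in_business", "loss_history"], 8_000.0)))  # auto_proceed
print(route(Recommendation(0.12, ["loss_history"], 120_000.0)))                    # human_review
```

Checking for a missing explanation first means an unexplained score never proceeds automatically, however favorable it looks.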

Most importantly, guard against automation bias: the tendency to accept automated outputs without enough scrutiny.

Train your staff to treat AI as support in their decision-making process, not as the decision-maker.

John Kain, Head of Worldwide Financial Services Market Development at Amazon Web Services, agrees:

Illustration: Veridion / Quote: Risk and Insurance

Without careful guardrails, your team can drift into passively accepting AI recommendations instead of thinking critically about them.

Run periodic audits of AI-driven approvals to catch drift, and train underwriters regularly to assess AI flags critically.
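One common way to check AI-driven approvals for drift is the Population Stability Index (PSI), which compares how approved policies are spread across risk-score bands now versus when the model was validated. The sketch below assumes hypothetical band counts and uses the widely cited rule of thumb that a PSI above 0.25 signals a significant shift. A high PSI can also reflect a genuine change in the applicant mix, so it tells you where to look, not what went wrong.

```python
import math


def to_proportions(counts: list[int]) -> list[float]:
    total = sum(counts)
    return [c / total for c in counts]


def psi(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions given as proportions."""
    if len(expected) != len(actual):
        raise ValueError("expected and actual must use the same bins")
    total = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)  # avoid log(0) for empty bins
        total += (a - e) * math.log(a / e)
    return total


# Hypothetical counts of approved policies per risk-score band (lowest to highest risk).
baseline = to_proportions([400, 300, 200, 100])  # e.g. the validation period
current = to_proportions([150, 250, 300, 300])   # e.g. the most recent quarter
drift = psi(baseline, current)
if drift > 0.25:  # common rule of thumb for a significant shift
    print(f"PSI {drift:.2f}: review recent AI-driven approvals")
```

Here approvals have shifted toward higher-risk bands, which is exactly the kind of change an underwriter should examine before trusting the model’s recent approvals.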

The goal isn’t to replace humans, but to enhance what they do.

AI has real limitations that you need to acknowledge.

By treating AI as a decision-support system, you harness its speed while retaining human expertise.

This hybrid model (data-driven insight plus seasoned judgment) produces better underwriting results.

If AI sometimes feels like a double-edged sword in underwriting, that’s because it is.

The risks are real: poor-quality data, model bias, compliance failures, data privacy and security concerns, and overreliance on automation.

But they don’t mean underwriters should step away from AI altogether.

By understanding how models are built, questioning outputs instead of accepting them at face value, maintaining strong data governance, and keeping humans in the loop, you can stay in control.

When AI is used as a decision-support tool rather than a decision-maker, it strengthens underwriting discipline instead of weakening it.

This helps you balance speed with sound risk judgment.